
I’m currently working on a project that involves face tracking, and as a first prototype am using the built-in features in the ARKit library, Apple’s augmented reality API for iOS.

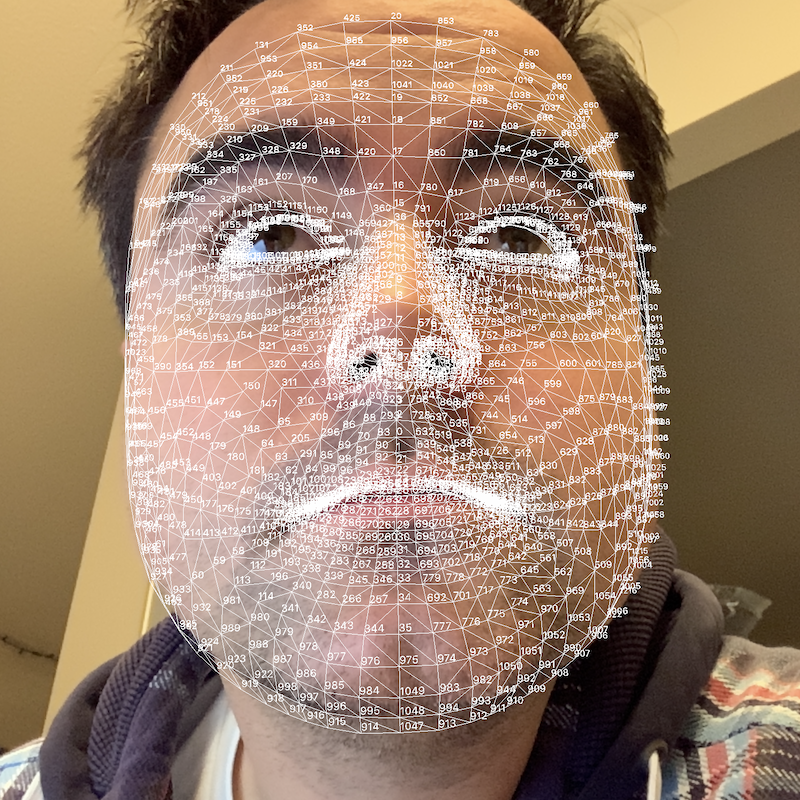

Using a device with a front-facing TrueDepth camera, an augmented reality session can be started to return quite a bit of information about faces it is tracking in an ARFaceAnchor object. One of these details is the face geometry itself, contained in the aptly named ARFaceGeometry object. For those who care about face shape landmarks, the 1220 individual vertices (points) can provide a wealth of information. However, there is little to no published information on which vertex corresponds to what point on the face.

While keeping in mind that this means these vertices could easily change in future versions of ARKit, I’ve taken the liberty of labeling points in case it is helpful for a future developer.

Mittlefehldt’s tutorial covers the setup: ARSession, ARFaceTrackingConfiguration, and so on. It even goes so far as to attach SCNNodes and child nodes that move with the face.

This is where Mittlefehldt stops short: he gives a few “magic numbers” corresponding to key points (eyes, nose, mouth, and hat) to attach emojis. To generate the maps above, I took this one short step further and added a child node for each vertex that displays its index number. If you’re reading through the tutorial, the difference is to add the following two methods to your `extension ViewController: ARSCNViewDelegate`:

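```swift
// These two methods go inside `extension ViewController: ARSCNViewDelegate`.
// The file needs `import ARKit` and `import SceneKit`; `cameraView` is the ARSCNView outlet.

// Called once when ARKit adds the face anchor: build the wireframe mesh plus
// one text label per vertex index we want to see.
func renderer(_ renderer: SCNSceneRenderer,
              nodeFor anchor: ARAnchor) -> SCNNode? {
    guard let device = cameraView.device else {
        return nil
    }
    let faceGeometry = ARSCNFaceGeometry(device: device)
    let node = SCNNode(geometry: faceGeometry)

    // Vertex indices to label; replace this list with any indices you like.
    for x in [1076, 1070, 1163, 1168, 1094, 358, 1108, 1102, 20, 661, 888, 822, 1047, 462, 376, 39] {
        let text = SCNText(string: "\(x)", extrusionDepth: 1)
        let txtnode = SCNNode(geometry: text)
        // SCNText is many scene units tall by default; shrink it to face scale (ARKit uses meters).
        txtnode.scale = SCNVector3(x: 0.0002, y: 0.0002, z: 0.0002)
        // Name the label after its vertex index so didUpdate can find it again.
        txtnode.name = "\(x)"
        node.addChildNode(txtnode)
        txtnode.geometry?.firstMaterial?.fillMode = .fill
    }

    // Draw the face mesh as a wireframe so the labels stay visible.
    node.geometry?.firstMaterial?.fillMode = .lines
    return node
}

// Called whenever the face anchor updates: move each label to its vertex's
// current position, then update the mesh itself.
func renderer(_ renderer: SCNSceneRenderer,
              didUpdate node: SCNNode,
              for anchor: ARAnchor) {
    guard let faceAnchor = anchor as? ARFaceAnchor,
          let faceGeometry = node.geometry as? ARSCNFaceGeometry
    else {
        return
    }

    let vertices = faceAnchor.geometry.vertices
    // Loop over the actual vertex count (1220 at the time of writing) instead of a
    // hard-coded range; indices without a label node are simply skipped.
    for x in vertices.indices {
        let child = node.childNode(withName: "\(x)", recursively: false)
        child?.position = SCNVector3(vertices[x])
    }

    faceGeometry.update(from: faceAnchor.geometry)
}
```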

You can replace the list of vertex numbers with anything you’d like. The images above include all vertices, followed by every 4th vertex to clear out particularly “busy” areas.
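For example, rather than typing indices by hand, the “every 4th vertex” list could be generated with `stride`. The helper name `labeledVertexIndices(vertexCount:step:)` is hypothetical, and the count of 1220 reflects ARKit at the time of writing; in the app you could read `faceAnchor.geometry.vertices.count` instead of hard-coding it. This is plain Swift, so it runs without a device:

```swift
// Hypothetical helper: the vertex indices to label, keeping every
// `step`-th index out of `vertexCount` total (starting at index 0).
func labeledVertexIndices(vertexCount: Int, step: Int) -> [Int] {
    precondition(step > 0, "step must be positive")
    return Array(stride(from: 0, to: vertexCount, by: step))
}

let allVertices = labeledVertexIndices(vertexCount: 1220, step: 1)
let everyFourth = labeledVertexIndices(vertexCount: 1220, step: 4)
print(allVertices.count)  // 1220
print(everyFourth.count)  // 305
```

In `renderer(_:nodeFor:)` you would then loop over such an array in place of the literal list.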

Hi, could you explain the code to find out each vertex point?

Hi Kapil,

I’ve updated the post to give a bit more guidance. Hope this helps!

Hi, did you follow the emoji tutorial thoroughly? I tried to implement your suggested code, but I only got the mesh, with no vertices shown.

Hi Andrew,

Wow; I am so sorry! When I updated this post to include the code to add vertices to the ARSCNView, I neglected to add the code that updates their position. That is now included. So sorry to make you struggle and wait for this!

John

Thanks man, you helped me a lot ❤️

This is very interesting, thanks for sharing. Is it possible to get the distance between two vertices? Say you wanted to get the length of the path from the left bridge of the nose to the right?

Yes of course. These points are SCNNodes, which have the property position, which is a SCNVector3 (a three-float vector). Simple geometry would say you can get the real-world distance between two nodes by subtracting their respective position vectors and taking the magnitude of the resulting vector. See for example this StackOverflow question.
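A minimal sketch of that calculation in plain Swift, assuming you read positions straight from `faceAnchor.geometry.vertices` (these are `SIMD3<Float>` values in the face’s own coordinate space, and ARKit works in meters) rather than from the label nodes. The function names are hypothetical. For a path such as the bridge of the nose, summing the straight-line distances between consecutive vertices along the path approximates the distance over the surface; on Apple platforms, `simd_distance` from the `simd` module does the same job as `vertexDistance` here.

```swift
// Straight-line (Euclidean) distance between two vertex positions:
// subtract the vectors, then take the magnitude of the difference.
func vertexDistance(_ a: SIMD3<Float>, _ b: SIMD3<Float>) -> Float {
    let d = a - b
    return (d * d).sum().squareRoot()
}

// Approximate length of a path visiting the given vertices in order,
// summing the straight-line distance between each consecutive pair.
func pathLength(_ points: [SIMD3<Float>]) -> Float {
    guard points.count > 1 else { return 0 }
    var total: Float = 0
    for i in 1..<points.count {
        total += vertexDistance(points[i - 1], points[i])
    }
    return total
}

// Made-up sample positions in arbitrary units (not real ARKit data).
let path: [SIMD3<Float>] = [
    SIMD3(0, 0, 0),
    SIMD3(3, 4, 0),
    SIMD3(3, 4, 12),
]
print(vertexDistance(path[0], path[1]))  // 5.0
print(pathLength(path))                  // 17.0
```

In the app, the points would be something like `[vertices[i], vertices[j], vertices[k]]` for vertex indices picked from the maps above.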

This is a fantastic example. Thanks so much! Using this mesh, can we measure, approximately or accurately, the distance between the left-ear and right-ear SCNNodes, and the vertical length of the user’s face?

Yes, approximately anyway. See the reply to the comment above.

This is a great example but I do have a question. Is it possible to display only the mesh without the face? If yes, I would be grateful if you could help. Thank you.

Hey, do you have the wireframe with the vertices? I need to know the exact position of all 1220 vertices.

This helps a lot. Amazing example.

How can I calculate the “Bitragion frontal arc” using this nord?

Is it possible to track and anchor a full side profile of the face, i.e. from the side?

In my brief testing, the face model does not acquire from the side. That said, my testing was long ago, and Apple’s approach may have changed since. (And of course, you could train a different machine learning model to do something similar with face profiles. This is just the one Apple has bundled in iOS.)

Hi John,

Thanks for sharing these amazing examples. I have two questions.

Do you know the reason why point 0 is determined there?

Do teeth or gums have anything to do with determining this point?

Thank you,

Okan AYDIN

Hi Okan, so sorry, but the points were entirely defined by Apple, and to the best of my knowledge the rationale isn’t documented anywhere.

I didn’t find it either, thank you.

Hi John, I want to know if I can use this for a jaw motion device?

I don’t see why not.